With data arriving from so many different sources, making sense of it all is hard. Traditional Relational AI Graphs (RAG) were a step forward in knowledge representation, helping systems map and store relationships between textual data points. However, today's digital ecosystem spans many modalities: images, documents, videos, and more. This is where Multimodal RAG steps in, combining multiple data types into a single, unified framework.

As organizations strive to make better decisions, understand customer behavior, and enhance automation, multimodal reasoning becomes critical. This post explores what Multimodal RAG is, the key benefits it provides, and how to implement it effectively—especially using tools like Azure Document Intelligence.

What Is Multimodal RAG?

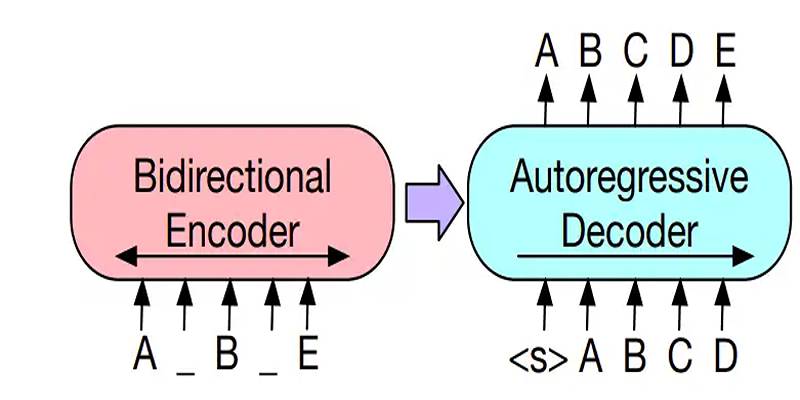

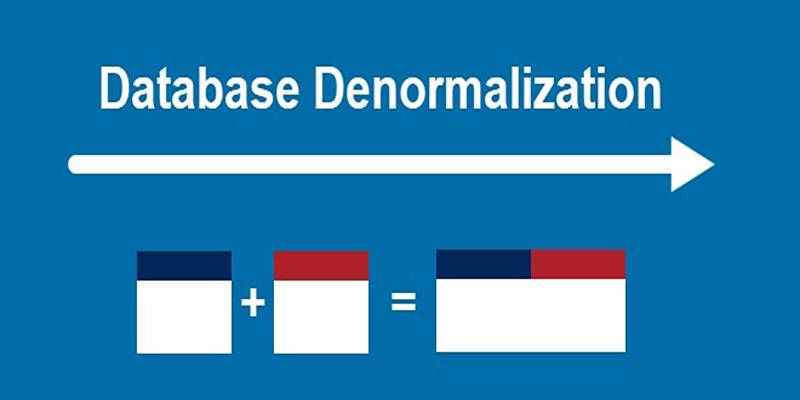

To understand Multimodal RAG, we must first look at RAG itself. A Relational AI Graph (RAG) is a structure where information is stored as nodes (entities) and edges (relationships between entities). Note that the acronym is used here for a graph structure, not for retrieval-augmented generation, which it more commonly denotes. This format allows for the modeling of complex real-world scenarios, such as user interactions, product purchases, or social connections, in a graph structure.

A Multimodal RAG, however, expands this foundation. It doesn't rely solely on text or structured databases. Instead, it incorporates multiple data types (images, scanned documents, audio clips, tables, or structured records) and links them in one graph. This makes it possible to build deeper, more context-aware knowledge graphs that reflect reality with greater accuracy.

For example, instead of just linking a customer to a transaction record, a multimodal RAG can also include the scanned invoice, product images, and even related audio support tickets—creating a 360-degree view of the customer interaction.
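The node-and-edge structure and the customer example above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `Node` and `Graph` classes, the relation names, and the file paths are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    kind: str        # entity type, e.g. "customer" or "invoice"
    modality: str    # "record", "document", "image", "audio", ...
    uri: str = ""    # hypothetical pointer to the raw asset, if any


@dataclass
class Graph:
    nodes: dict = field(default_factory=dict)  # node_id -> Node
    edges: list = field(default_factory=list)  # (source, relation, target)

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def add_edge(self, source: str, relation: str, target: str) -> None:
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must be added before linking them")
        self.edges.append((source, relation, target))

    def neighbors(self, node_id: str) -> list:
        """Return (relation, Node) pairs for edges leaving node_id."""
        return [(rel, self.nodes[dst]) for src, rel, dst in self.edges if src == node_id]


def build_customer_view() -> Graph:
    """Link one customer to records, a scanned invoice, an image, and an audio ticket."""
    g = Graph()
    g.add_node(Node("cust-1", "customer", "record"))
    g.add_node(Node("txn-1", "transaction", "record"))
    g.add_node(Node("inv-1", "invoice", "document", "invoices/inv-1.pdf"))
    g.add_node(Node("img-1", "product_image", "image", "images/widget.png"))
    g.add_node(Node("call-1", "support_ticket", "audio", "audio/call-1.wav"))
    g.add_edge("cust-1", "MADE", "txn-1")
    g.add_edge("txn-1", "DOCUMENTED_BY", "inv-1")
    g.add_edge("txn-1", "INCLUDES_PRODUCT_SHOWN_IN", "img-1")
    g.add_edge("cust-1", "RAISED", "call-1")
    return g
```

Calling `build_customer_view().neighbors("txn-1")` returns the invoice and product image nodes, each tagged with its modality, which is the kind of cross-format lookup a text-only graph cannot answer.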

Why Multimodality Matters

In real-world applications, data doesn’t come in a single format. A legal firm might have signed contracts (PDFs), handwritten notes (images), and digital communications (text). In healthcare, radiology images, lab results, and clinical notes must be analyzed together. Relying on a text-only model leads to a loss of insight.

By integrating multiple data sources, a multimodal system extracts richer insights, improves accuracy in relationship mapping, and supports advanced use cases like automated question answering and intelligent search. Multimodality brings contextual depth. The graph doesn't just record that two items are related; it also holds the evidence for how and why they are, which is key for decision-making and reasoning.

Key Benefits of Multimodal RAG

The evolution from unimodal to multimodal RAGs offers significant value across industries. Some of the most compelling benefits include:

Improved Entity Recognition

When systems analyze both textual and visual data, they can identify entities with far greater accuracy. A name mentioned in a text field can be verified against an ID in a scanned image, minimizing errors in identity resolution.
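As a rough sketch of that verification step, the snippet below compares a name from a text field with a name read from a scanned ID. It uses fuzzy matching from Python's standard `difflib` module; the normalization rules and the 0.85 threshold are assumptions chosen for illustration, not tuned values.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase, replace punctuation with spaces, and sort tokens so word order is ignored."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in name.lower())
    return " ".join(sorted(cleaned.split()))


def same_person(text_name: str, ocr_name: str, threshold: float = 0.85) -> bool:
    """Return True when the two names are similar enough to treat as one entity."""
    ratio = SequenceMatcher(None, normalize(text_name), normalize(ocr_name)).ratio()
    return ratio >= threshold
```

With these settings, `same_person("Jane A. Doe", "DOE, JANE A")` is true because both normalize to the same token string, while unrelated names fall well below the threshold. A production system would likely add more signals, such as date of birth or document number.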

Enhanced Relationship Extraction

Relationships that are unclear in the text may be clarified through visuals or structured tables. For example, a scanned receipt may contain information about payment methods that complement transaction logs, giving a complete picture of a customer's purchase.
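The receipt example can be sketched as a simple join. The code below assumes both sources have already been parsed into dictionaries and share a `transaction_id` key; that key, the field names, and the `PAID_WITH` relation are hypothetical.

```python
def enrich_transactions(transactions, receipts):
    """Attach receipt-only fields to matching transaction records.

    Both inputs are lists of dicts; `transaction_id` is a hypothetical join key.
    """
    receipts_by_id = {r["transaction_id"]: r for r in receipts}
    enriched = []
    for txn in transactions:
        receipt = receipts_by_id.get(txn["transaction_id"], {})
        merged = dict(txn)
        # Only fill gaps: values already in the log are treated as authoritative.
        for key, value in receipt.items():
            merged.setdefault(key, value)
        merged["has_receipt"] = bool(receipt)
        enriched.append(merged)
    return enriched


def paid_with_edges(enriched):
    """Emit (transaction, PAID_WITH, method) edges where a payment method is known."""
    return [
        (row["transaction_id"], "PAID_WITH", row["payment_method"])
        for row in enriched
        if row.get("payment_method")
    ]
```

Treating the transaction log as authoritative is one design choice among several; a pipeline that trusts the scanned document more would reverse the merge order.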

Comprehensive Knowledge Graph Construction

With access to more varied data, knowledge graphs become more detailed and representative of real-world scenarios. Because each modality can confirm or fill in what another misses, Multimodal RAGs can improve both recall and precision in graph construction, aiding intelligent applications like recommendation systems or digital assistants.

Context-Rich Decision-Making

Decisions based on multimodal insights can be more accurate because they draw on more evidence. For instance, in fraud detection, analyzing image data alongside transaction logs can help uncover document tampering or forged invoices that text-only systems would miss.
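One simple version of that fraud check compares the total extracted from each scanned invoice with the amount in the transaction log. The sketch below assumes both are available as mappings from invoice id to amount; the reason codes and the one-cent tolerance are illustrative.

```python
from decimal import Decimal


def flag_mismatches(logged, extracted, tolerance=Decimal("0.01")):
    """Return (invoice_id, reason) pairs for invoices that need review.

    `logged` and `extracted` map invoice id -> amount as a string or Decimal.
    Invoices with no extracted total are reported too, since a missing
    document can itself be a signal.
    """
    flagged = []
    for invoice_id, logged_amount in logged.items():
        if invoice_id not in extracted:
            flagged.append((invoice_id, "no_document"))
            continue
        diff = abs(Decimal(logged_amount) - Decimal(extracted[invoice_id]))
        if diff > tolerance:
            flagged.append((invoice_id, "amount_mismatch"))
    return flagged
```

`Decimal` is used instead of floats so that currency comparisons are exact. Real tamper detection would go further, for example by inspecting the image itself, but even this cross-check is unavailable to a system that only sees the log.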

Azure Document Intelligence: Empowering Multimodal RAG

To implement Multimodal RAG effectively, organizations need a robust system for ingesting and analyzing multimodal data. Azure Document Intelligence, formerly known as Azure Form Recognizer, provides this foundation.

It offers tools for:

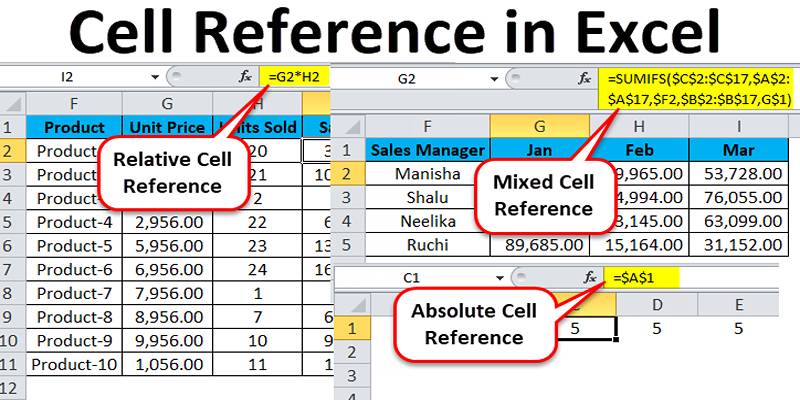

- Extracting structured data from PDFs, images, and scanned documents

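- Named Entity Recognition (NER) to identify key fields like names, dates, and amounts (provided by the companion Azure AI Language service)

- Optical Character Recognition (OCR) to convert visual content into usable data

- Key Phrase Extraction (KPE) to detect important concepts (likewise part of Azure AI Language)

- Question answering for querying knowledge across formats in natural language (QnA Maker has been retired; custom question answering in Azure AI Language is its successor)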

Azure Document Intelligence bridges the gap between raw, unstructured data and structured RAG-compatible formats, making it a cornerstone of multimodal system development.
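As a hedged sketch of the extraction step, the code below calls the prebuilt invoice model through the `azure-ai-formrecognizer` Python package and flattens the returned fields. The endpoint, key, and file path are placeholders you would supply, and `fields_to_record` is a hypothetical helper. Newer projects may prefer the `azure-ai-documentintelligence` package, whose client and method signatures differ from the ones shown here.

```python
def fields_to_record(fields):
    """Flatten {name: field} into {name: (value, confidence)}.

    Each field object only needs `.value` and `.confidence` attributes.
    """
    return {name: (f.value, f.confidence) for name, f in fields.items()}


def analyze_invoice(path, endpoint, key):
    """Run the prebuilt invoice model on a local file and return one record per document."""
    # Imported here so fields_to_record can be used without the SDK installed.
    from azure.ai.formrecognizer import DocumentAnalysisClient
    from azure.core.credentials import AzureKeyCredential

    client = DocumentAnalysisClient(endpoint, AzureKeyCredential(key))
    with open(path, "rb") as f:
        poller = client.begin_analyze_document("prebuilt-invoice", document=f)
        result = poller.result()  # blocks until the service finishes
    return [fields_to_record(doc.fields) for doc in result.documents]
```

Keeping the confidence score next to each value lets later stages decide which fields are reliable enough to become nodes or edges.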

Implementation Strategy: Building Multimodal RAG Step-by-Step

Building a Multimodal RAG is a structured process that requires careful planning and the right tools. Here's a step-by-step overview of how organizations can implement it using Azure’s ecosystem.

Step 1: Data Preparation

Collect multimodal data from relevant sources—documents, databases, forms, spreadsheets, and images. Azure’s parsing tools and OCR capabilities help standardize this data for further processing.
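A first pass at data preparation is often just an inventory: which files exist, what modality each one is, and which ones need OCR. The sketch below builds such a manifest from file paths. The extension table is an assumption and would need extending for real sources.

```python
from pathlib import Path

# Hypothetical extension-to-modality table; extend it to match your sources.
MODALITY_BY_SUFFIX = {
    ".pdf": "document",
    ".png": "image", ".jpg": "image", ".jpeg": "image", ".tiff": "image",
    ".csv": "table", ".xlsx": "table",
    ".txt": "text", ".json": "record",
}


def build_manifest(paths):
    """Return one entry per file with its modality and whether it needs OCR."""
    manifest = []
    for raw in paths:
        modality = MODALITY_BY_SUFFIX.get(Path(raw).suffix.lower(), "unknown")
        manifest.append({
            "source": str(raw),
            "modality": modality,
            "needs_ocr": modality in {"document", "image"},
        })
    return manifest
```

Files that come back as `"unknown"` are worth logging rather than dropping, since they usually point to a source format the pipeline has not accounted for yet.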

Step 2: Entity and Relationship Detection

Use Azure's NER and KPE models to extract entities (like people, products, and dates) and the phrases that signal relationships (like ownership, transactions, and hierarchy) across different data formats. This lays the foundation for constructing the knowledge graph.
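Once fields have been extracted, they still have to be turned into graph elements. The sketch below does this for a single invoice, assuming the extraction output is a mapping from field name to a `(value, confidence)` pair. The field names, relation names, and the 0.8 confidence cut-off are assumptions for illustration.

```python
def invoice_to_graph(doc_id, fields, min_confidence=0.8):
    """Turn one invoice's extracted fields into nodes and edges.

    `fields` maps a field name to (value, confidence). The field names used
    here (VendorName, CustomerName, InvoiceTotal) are assumptions.
    """
    def get(name):
        value, confidence = fields.get(name, (None, 0.0))
        return value if confidence >= min_confidence else None

    nodes = {doc_id: {"type": "invoice", "total": get("InvoiceTotal")}}
    edges = []
    for field_name, node_type, relation in [
        ("VendorName", "organization", "ISSUED_BY"),
        ("CustomerName", "customer", "BILLED_TO"),
    ]:
        value = get(field_name)
        if value is None:
            continue  # low-confidence or missing values are left out
        node_id = f"{node_type}:{value.strip().lower()}"
        nodes[node_id] = {"type": node_type, "name": value.strip()}
        edges.append((doc_id, relation, node_id))
    return nodes, edges
```

Deriving the node id from the normalized name means the same vendor on two invoices maps to one node, which is a crude but useful form of entity resolution.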

Step 3: Graph Modeling and Integration

Construct the knowledge graph using the extracted nodes and edges. Integrate multimodal embeddings and metadata into the graph using a graph database such as Neo4j or a graph learning library such as PyTorch Geometric, depending on the complexity and use case.
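One way to use embeddings in the graph is to propose links between nodes whose vectors are close, regardless of modality. The following is a dependency-free sketch using cosine similarity. It assumes all vectors come from a shared embedding space, and the `SIMILAR_TO` relation and 0.9 threshold are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_edges(embeddings, threshold=0.9):
    """Propose SIMILAR_TO edges between nodes whose embeddings are close.

    `embeddings` maps node id -> vector; all vectors are assumed to share one
    embedding space, whatever modality they came from.
    """
    ids = sorted(embeddings)
    edges = []
    for i, left in enumerate(ids):
        for right in ids[i + 1:]:
            score = cosine(embeddings[left], embeddings[right])
            if score >= threshold:
                edges.append((left, "SIMILAR_TO", right, round(score, 3)))
    return edges
```

The pairwise loop is quadratic in the number of nodes, so it only suits small graphs; at scale, a vector index or the similarity features of a graph database would take its place.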

Step 4: Evaluation and Refinement

Validate your model using labeled test data. Refine entity matching, relation extraction, and data ingestion pipelines iteratively. Azure's custom models can be retrained as new labeled data arrives, and the pipeline can be scaled based on performance metrics.
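Validation against labeled data can be as simple as comparing the edges the pipeline produced with a hand-labeled gold set. The sketch below computes precision, recall, and F1 over edge triples; the function name and the triple format are assumptions.

```python
def edge_scores(predicted, gold):
    """Score predicted (source, relation, target) triples against a gold set."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

Tracking these three numbers after each pipeline change shows whether a refinement actually helped, and whether a gain in recall came at the cost of precision.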

The Road Ahead: Future of Multimodal RAG

As AI advances, Multimodal RAGs are poised to become even more powerful. We can expect:

- Greater use of foundation models and LLMs to support dynamic question-answering over complex graphs

- Integration with live data streams for real-time recommendations or alerts

- Domain-specific multimodal models trained for healthcare, finance, legal, and other verticals

- Stronger safety and governance through explainable AI, ensuring transparency in how graphs are constructed and used

The potential to unify all forms of enterprise data under one intelligent graph makes Multimodal RAG a future-proof solution for data-driven innovation.

Conclusion

Multimodal RAG is more than an upgrade: it changes how we connect and understand data. Integrating text, images, and structured data into a unified graph enables more accurate analytics, richer insights, and smarter automation. Tools like Azure Document Intelligence make implementation accessible, even for complex enterprise environments. From fraud detection to healthcare, the benefits are concrete. As the technology evolves, so too will the opportunities for organizations that embrace it.