Performance is critical in data-driven applications because it largely determines how responsive and usable a system is. As data volumes grow and users expect real-time access, developers often look beyond traditional database normalization practices to meet performance goals. One such performance-boosting strategy is denormalization.

Denormalization is a widely used database design technique, especially in systems that prioritize read performance and reporting efficiency. Whereas normalization aims to eliminate data redundancy and protect integrity, denormalization accepts a controlled level of redundancy in exchange for performance. This post explains what denormalization is, its advantages and trade-offs, when to use it, and common techniques for implementing it.

Understanding Denormalization

Denormalization is the process of deliberately introducing redundancy into a previously normalized database structure to optimize query performance. It involves combining tables or adding redundant data fields to reduce the complexity of queries, especially in read-intensive environments.

Normalization spreads data across multiple related tables to eliminate duplication and ensure consistency; denormalization merges or flattens those structures. This reduces the number of joins that queries need, which in turn improves speed and system responsiveness.
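To make the contrast concrete, here is a minimal sketch using Python's built-in `sqlite3` module and an in-memory SQLite database. The `customers`, `orders`, and `orders_denorm` tables and their data are hypothetical. The denormalized table copies each customer's name and city onto every order row, so the same question can be answered with a join (normalized) or without one (denormalized).

```python
import sqlite3

# In-memory SQLite database with a hypothetical customers/orders schema.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized: customer details live only in `customers`.
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        city        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada', 'London'), (2, 'Grace', 'New York');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.5);

    -- Denormalized: customer name and city are copied onto every order row.
    CREATE TABLE orders_denorm AS
    SELECT o.order_id, o.customer_id, c.name AS customer_name,
           c.city AS customer_city, o.amount
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id;
""")

# Normalized read: a join is needed to show who placed each order.
normalized = conn.execute("""
    SELECT o.order_id, c.name, c.city, o.amount
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()

# Denormalized read: the same answer from a single table, no join.
denormalized = conn.execute("""
    SELECT order_id, customer_name, customer_city, amount
    FROM orders_denorm
    ORDER BY order_id
""").fetchall()

print(normalized == denormalized)  # True
```

The denormalized query is also simpler to write, which is the "simplified query logic" benefit discussed below; the price is that `customer_name` and `customer_city` now exist in two places.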

Advantages of Denormalization

Denormalization offers several benefits that make it appealing in performance-critical systems. Below are the main advantages:

Improved Query Performance

Denormalization reduces the need for complex joins by consolidating related data. This can significantly improve query response times, especially when retrieving large datasets or running frequent reads. It’s particularly beneficial in applications with high data volumes and stringent performance expectations.

Simplified Query Logic

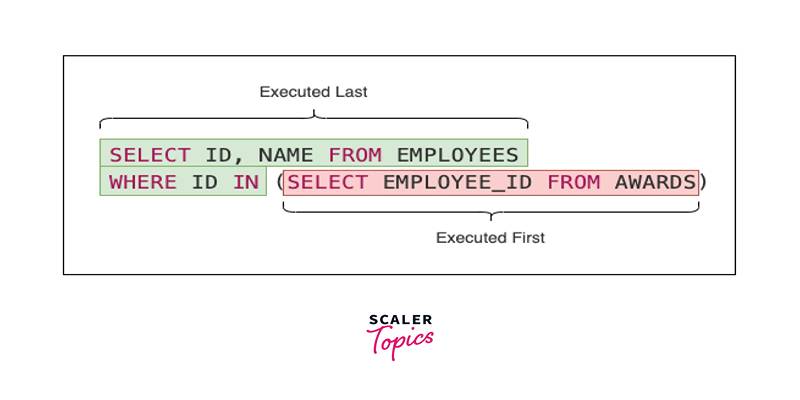

With fewer tables and dependencies, queries become easier to write, maintain, and understand. Developers and analysts can write more straightforward SQL, which can boost productivity and reduce debugging time.

Lower Database Load

By minimizing the number of joins and aggregations performed at query time, denormalization reduces the processing burden on the database server. This can improve resource utilization and, because each read touches fewer tables, reduce lock contention and improve concurrency for read queries during high-traffic periods.

Enhanced Reporting and Analytics

Denormalized schemas support faster generation of reports by reducing the need for on-the-fly aggregations. Summary data, totals, or frequently accessed values can be stored directly within the schema, enabling swift analytics operations without recalculating values repeatedly.
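As an illustration of storing a summary value directly in the schema, the sketch below keeps a precomputed `order_total` on each order and updates it whenever a line item is added. It assumes SQLite via Python's `sqlite3`; the tables and the `add_item` helper are hypothetical.

```python
import sqlite3

# Hypothetical schema: each order has line items, and the order row also
# stores a precomputed `order_total` (a redundant, derived column).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        order_total REAL NOT NULL DEFAULT 0
    );
    CREATE TABLE order_items (
        order_id   INTEGER NOT NULL REFERENCES orders(order_id),
        quantity   INTEGER NOT NULL,
        unit_price REAL NOT NULL
    );
""")

def add_item(conn, order_id, quantity, unit_price):
    """Insert a line item and update the stored total in the same transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO order_items VALUES (?, ?, ?)",
            (order_id, quantity, unit_price),
        )
        conn.execute(
            "UPDATE orders SET order_total = order_total + ? WHERE order_id = ?",
            (quantity * unit_price, order_id),
        )

with conn:
    conn.execute("INSERT INTO orders (order_id) VALUES (1)")
add_item(conn, 1, 2, 10.0)
add_item(conn, 1, 1, 5.5)

# Report query: read the stored value, no aggregation at read time.
stored = conn.execute(
    "SELECT order_total FROM orders WHERE order_id = 1"
).fetchone()[0]

# Equivalent normalized query: aggregate the line items on the fly.
computed = conn.execute(
    "SELECT SUM(quantity * unit_price) FROM order_items WHERE order_id = 1"
).fetchone()[0]

print(stored, computed)  # 25.5 25.5
```

Both values agree, but the report reads a single column instead of scanning and summing line items. The catch is that every code path that modifies `order_items` (including updates and deletes, not shown here) must also adjust `order_total`.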

Faster Data Access

Denormalized structures keep frequently needed data together in a single table. This minimizes lookups and improves performance for read-heavy applications where speed is critical.

Trade-Offs and Considerations

While denormalization provides clear performance advantages, it comes with trade-offs that must be carefully evaluated. Improper implementation can lead to serious data management challenges.

Increased Redundancy

The most apparent downside of denormalization is the duplication of data. Redundant data can cause storage bloat and increase the risk of inconsistencies, especially when the same data is updated in multiple places.

Complex Data Maintenance

Maintaining data consistency in a denormalized structure requires additional effort. Updates and deletions must be carefully propagated to every redundant copy to avoid discrepancies, which increases the operational complexity of the database.
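One way to propagate updates is to let the database do it. The following sketch, again assuming SQLite through Python's `sqlite3` with hypothetical `customers` and `orders` tables, uses a trigger to rewrite the redundant `customer_name` copies whenever a customer's name changes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    -- Denormalized: customer_name is a redundant copy of customers.name.
    CREATE TABLE orders (
        order_id      INTEGER PRIMARY KEY,
        customer_id   INTEGER NOT NULL,
        customer_name TEXT NOT NULL
    );
    -- Propagate name changes to every redundant copy automatically.
    CREATE TRIGGER sync_customer_name
    AFTER UPDATE OF name ON customers
    BEGIN
        UPDATE orders SET customer_name = NEW.name
        WHERE customer_id = NEW.customer_id;
    END;

    INSERT INTO customers VALUES (1, 'Ada Byron');
    INSERT INTO orders VALUES (10, 1, 'Ada Byron'), (11, 1, 'Ada Byron');
""")

# A single update to the source row; the trigger fixes the copies.
with conn:
    conn.execute("UPDATE customers SET name = 'Ada Lovelace' WHERE customer_id = 1")

names = [row[0] for row in conn.execute(
    "SELECT customer_name FROM orders ORDER BY order_id"
)]
print(names)  # ['Ada Lovelace', 'Ada Lovelace']
```

Triggers keep the propagation logic in one place, but they also add hidden work to every update of `customers`. Application-level updates or scheduled batch jobs are alternatives, each with its own consistency guarantees.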

Higher Storage Requirements

Storing redundant data increases the overall size of the database. Although storage costs have fallen over time, the extra volume can still be significant in systems that handle very large datasets.

Impact on Write Operations

Denormalization can hurt write performance. A single insert, update, or delete may require changes to several fields or tables, resulting in slower transactions and higher latency in write-heavy workloads.
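The extra write cost can be seen directly by counting rows. In this sketch (SQLite via Python's `sqlite3`; the schema and the `rename_customer` helper are hypothetical), renaming one customer who has three orders writes four rows instead of the single row a normalized schema would need.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id      INTEGER PRIMARY KEY,
        customer_id   INTEGER NOT NULL,
        customer_name TEXT NOT NULL   -- redundant copy of customers.name
    );
    INSERT INTO customers VALUES (1, 'Ada Byron');
    INSERT INTO orders VALUES (10, 1, 'Ada Byron'), (11, 1, 'Ada Byron'),
                              (12, 1, 'Ada Byron');
""")

def rename_customer(conn, customer_id, new_name):
    """Hypothetical helper: apply one logical change to the source row and
    every redundant copy, returning how many rows were physically written."""
    with conn:  # both UPDATEs commit together or roll back together
        touched = conn.execute(
            "UPDATE customers SET name = ? WHERE customer_id = ?",
            (new_name, customer_id),
        ).rowcount
        touched += conn.execute(
            "UPDATE orders SET customer_name = ? WHERE customer_id = ?",
            (new_name, customer_id),
        ).rowcount
    return touched

touched = rename_customer(conn, 1, "Ada Lovelace")
print(touched)  # 4
```

The number of extra writes grows with the number of copies, so a customer with thousands of orders turns one rename into thousands of row updates inside a single transaction.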

Data Inconsistency Risks

Without robust validation and synchronization mechanisms, denormalization can lead to mismatched or outdated data. This is particularly dangerous in systems where data accuracy is critical for operations or compliance.
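A simple safeguard is a periodic check that compares redundant copies against their source. The sketch below (SQLite via Python's `sqlite3`, with hypothetical tables and a deliberately stale row) lists every order whose stored customer name no longer matches the `customers` table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id      INTEGER PRIMARY KEY,
        customer_id   INTEGER NOT NULL,
        customer_name TEXT NOT NULL   -- redundant copy of customers.name
    );
    INSERT INTO customers VALUES (1, 'Ada Lovelace'), (2, 'Grace Hopper');
    -- Order 11 holds a stale copy, as if an update was never propagated.
    INSERT INTO orders VALUES (10, 1, 'Ada Lovelace'), (11, 1, 'Ada Byron'),
                              (12, 2, 'Grace Hopper');
""")

def find_drift(conn):
    """Return (order_id, stored_name, current_name) for every stale copy."""
    return conn.execute("""
        SELECT o.order_id, o.customer_name, c.name
        FROM orders AS o
        JOIN customers AS c ON c.customer_id = o.customer_id
        WHERE o.customer_name <> c.name
        ORDER BY o.order_id
    """).fetchall()

print(find_drift(conn))  # [(11, 'Ada Byron', 'Ada Lovelace')]
```

A check like this can run as a scheduled job and feed an alert or a repair step; it detects drift after the fact rather than preventing it.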

When to Use Denormalization

Denormalization should not be used indiscriminately. It is most beneficial when applied in environments that meet the following conditions:

- Read-Heavy Workloads: Systems that perform many reads compared to writes can benefit significantly from reduced query complexity.

- Performance Bottlenecks: If joins and aggregations are slowing down query performance, denormalization can help streamline operations.

- Complex Reporting Needs: Applications that rely heavily on reporting and analytics often benefit from summary tables or pre-aggregated values.

- Real-Time Data Access: In scenarios where immediate access to data is essential — such as dashboards or real-time feeds — denormalization provides faster retrieval.

- Frequent Data Access Patterns: If specific data is accessed repeatedly in the same format, storing it redundantly can reduce load and boost speed.

It’s important to analyze the data access patterns and query logs before implementing denormalization to ensure it aligns with actual performance needs.
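As a rough starting point for that analysis, the sketch below tallies reads, writes, and reads that use a join from a query log. The log format (one SQL statement per entry) and its contents are hypothetical; real database logs differ by engine and would need their own parsing. Classifying statements by their first keyword is also a simplification that, for example, ignores queries beginning with `WITH`.

```python
from collections import Counter

# Hypothetical query log: one SQL statement per entry.
query_log = [
    "SELECT * FROM orders WHERE customer_id = 1",
    "SELECT name FROM customers WHERE customer_id = 1",
    "select o.order_id, c.name from orders o join customers c on c.customer_id = o.customer_id",
    "INSERT INTO orders VALUES (13, 2, 9.99)",
    "SELECT COUNT(*) FROM orders",
    "UPDATE customers SET name = 'Ada Lovelace' WHERE customer_id = 1",
]

WRITE_VERBS = {"INSERT", "UPDATE", "DELETE", "REPLACE"}

def profile_workload(log):
    """Tally reads, writes, and joined reads by each statement's first keyword."""
    counts = Counter()
    for statement in log:
        words = statement.split()
        if not words:
            continue  # skip blank entries
        verb = words[0].upper()
        if verb == "SELECT":
            counts["read"] += 1
            if "JOIN" in (w.upper() for w in words):
                counts["join_read"] += 1
        elif verb in WRITE_VERBS:
            counts["write"] += 1
    return counts

counts = profile_workload(query_log)
ratio = counts["read"] / counts["write"] if counts["write"] else float("inf")
print(counts["read"], counts["write"], counts["join_read"], ratio)  # 4 2 1 2.0
```

A high read-to-write ratio combined with many joined reads is the kind of profile where denormalization tends to pay off; a write-heavy profile points the other way.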

Techniques for Denormalization

Denormalization is implemented using a variety of structural changes and design choices, depending on the database schema and performance objectives. Common techniques include:

- Merging Tables: Combining related tables into one to eliminate the need for joins.

- Adding Redundant Columns: Storing frequently accessed or calculated values within a table to avoid recalculation.

- Creating Summary Tables: Pre-aggregated tables that store totals, averages, or counts for faster querying.

- Storing Derived Data: Including fields for calculated metrics such as percentages, rankings, or statistical summaries directly in the database.

Each technique should be chosen based on its impact on performance, maintainability, and data consistency.
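Summary tables are among the simplest of these techniques to implement. The sketch below, assuming SQLite via Python's `sqlite3` with hypothetical `sales` and `daily_sales_summary` tables, rebuilds a per-day summary in a single transaction.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (
        sale_id   INTEGER PRIMARY KEY,
        sale_date TEXT NOT NULL,   -- ISO-8601 date, e.g. '2024-01-31'
        amount    REAL NOT NULL
    );
    -- Summary table: one pre-aggregated row per day.
    CREATE TABLE daily_sales_summary (
        sale_date  TEXT PRIMARY KEY,
        sale_count INTEGER NOT NULL,
        total      REAL NOT NULL
    );
    INSERT INTO sales (sale_date, amount) VALUES
        ('2024-01-01', 10.0), ('2024-01-01', 15.0), ('2024-01-02', 7.5);
""")

def refresh_summary(conn):
    """Rebuild the summary table from the base table in one transaction."""
    with conn:  # readers never see a half-rebuilt summary after commit
        conn.execute("DELETE FROM daily_sales_summary")
        conn.execute("""
            INSERT INTO daily_sales_summary (sale_date, sale_count, total)
            SELECT sale_date, COUNT(*), SUM(amount)
            FROM sales
            GROUP BY sale_date
        """)

refresh_summary(conn)
rows = conn.execute(
    "SELECT sale_date, sale_count, total FROM daily_sales_summary ORDER BY sale_date"
).fetchall()
print(rows)  # [('2024-01-01', 2, 25.0), ('2024-01-02', 1, 7.5)]
```

Reports then query `daily_sales_summary` instead of aggregating `sales` each time. Because the summary is only as fresh as its last refresh, the refresh schedule has to match how current the reports need to be.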

Conclusion

Denormalization is a strategic tool in the database designer’s toolkit, especially when addressing performance issues in read-heavy environments. By intelligently introducing redundancy, developers can significantly improve query speed, simplify analytics, and reduce system overhead.

However, it must be implemented with care. Its trade-offs, such as increased maintenance effort, higher storage use, and the risk of inconsistencies, require a clear understanding of system requirements and usage patterns. When applied thoughtfully and monitored continuously, denormalization can turn a sluggish database into a high-performing system that meets modern demands.