Language models are reshaping how humans interact with machines. From drafting content to answering customer inquiries, these AI tools have become integral in both casual and professional settings. Two names that often dominate the conversation are GPT-4, developed by OpenAI, and Llama 3.1, released by Meta. Both promise powerful natural language capabilities, but how do they compare when placed side by side?

This article provides a clear, user-friendly comparison between GPT-4 and Llama 3.1. We'll look at their unique strengths, architectural differences, and where each shines best. By the end, you should know which model better fits your goals.

Design Philosophy and Architecture

While both models are transformer-based, their design choices reflect different priorities.

GPT-4: Focused on Versatility and Safety

GPT-4 prioritizes versatility. With a unified API, it can be used for a wide array of applications, from casual conversation to enterprise-level analysis. GPT-4 excels at understanding nuance, performing reasoning tasks, and generating fluent, context-aware responses.

It includes safeguards and alignment training intended to improve factual accuracy and reduce harmful outputs. Because the model is closed-source, however, its architecture, training data, and parameter count remain undisclosed.

Llama 3.1: Built for Customization and Scale

Llama 3.1 uses a standard decoder-only transformer rather than a mixture-of-experts design, a choice that favors stable training and ease of use. It also supports a 128K-token context window, enabling it to handle long documents and complex prompts without losing track of the context.
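To get a feel for what a 128K-token window means in practice, here is a minimal sketch that estimates whether a prompt fits. It assumes roughly four characters per token, which is a crude heuristic for English text and not the behavior of Llama 3.1's actual tokenizer. The function names and the reserved output budget are hypothetical, chosen only for illustration.

```python
# Rough check of whether a prompt fits in a 128K-token context window.
# ASSUMPTION: ~4 characters per token is a crude heuristic for English text,
# not the output of Llama 3.1's real tokenizer.

CONTEXT_WINDOW_TOKENS = 128_000  # "128K" taken here as 128,000 tokens
CHARS_PER_TOKEN = 4              # hypothetical average; measure with a real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 1_000) -> bool:
    """Return True if the prompt plus a reserved output budget fits the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

For real workloads, swap the heuristic for a count from the tokenizer that ships with the model, since token counts vary widely by language and content type.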

Its openly available weights, released under Meta's community license, allow developers to experiment, optimize, and fine-tune the model for domain-specific tasks, a major plus for advanced users who need full control over their AI tools.

Capabilities and Strengths

GPT-4

- Natural Conversations: Fluent, engaging, and capable of handling long interactions.

- Creative Output: Strong performance in generating poetry, fiction, and storytelling.

- Multilingual Support: Understands and generates content in many languages.

- Tool Use: Integrates well with plugins and external APIs.

- Context Awareness: Maintains coherence even over extended dialogues.

Llama 3.1

- High Accuracy: Excellent performance in question answering and summarization tasks.

- Long Contexts: Efficiently handles very long prompts and documents.

- Multilingual Proficiency: Officially supports eight languages, including English, Spanish, and Hindi.

- Fine-Tuning Friendly: Easily customized for specific industries or domains.

- Open Ecosystem: Freely available for integration, training, and research.

Performance Comparisons

Performance is one of the most important criteria when comparing large language models. Both GPT-4 and Llama 3.1 show remarkable strength across general use cases, but differences emerge in how they handle language understanding, reasoning, and multi-step tasks.

General Language Understanding

GPT-4 still leads in generalized performance and context handling, especially in open-ended tasks. It produces nuanced responses, recognizes tone, and performs well across a variety of knowledge domains.

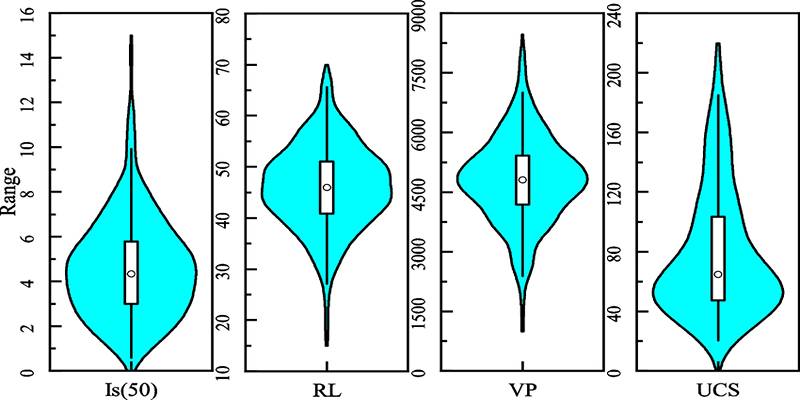

Llama 3.1 comes close on many benchmarks, particularly for its size. The 70B and 405B models are competitive on benchmarks such as MMLU and ARC, and the lower compute requirements of the 8B model make it a strong contender for latency-sensitive and on-device systems.

Reasoning and Comprehension

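Both models perform well in logical reasoning. GPT-4 tends to come out ahead on multi-step reasoning tasks, most plausibly because of its extensive tuning for complex queries rather than context length, since Llama 3.1's 128K-token window is at least as large as those of GPT-4's published variants.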

Llama 3.1, even in its smaller variants, performs surprisingly well in math, code generation, and fact-based queries when fine-tuned. It benefits from openly available weights and published training details, which make it easier to adapt.

Multimodal Capabilities

While GPT-4 has demonstrated multimodal abilities, including processing text and images, Llama 3.1 primarily focuses on text-based tasks.

- GPT-4: GPT-4’s multimodal nature allows it to accept both text and image inputs, making it useful for applications like visual question answering, diagram analysis, and image captioning. This positions GPT-4 as a more versatile tool in areas where understanding both visual and textual context is important. Developers can leverage this functionality in platforms that require richer media input, such as educational tools and accessibility-focused applications.

- Llama 3.1: Currently, Llama 3.1 is designed strictly for text-based input and output. While Meta has mentioned potential future enhancements to enable multimodal processing, the current focus remains on optimizing natural language understanding and generation. This means Llama 3.1 may not yet serve scenarios that require visual context, but it excels in focused NLP tasks like summarization, translation, and code generation. Its text-only focus also keeps deployment simpler where text processing is the primary concern.
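To make the difference concrete, here is a hedged sketch of what a combined text-and-image request body can look like. The layout follows the content-parts structure used by OpenAI's Chat Completions API as we understand it. The helper name, model name, and image URL are placeholders, so check the current API reference before relying on the exact fields. The sketch only builds the payload and does not send it.

```python
import json


def build_vision_request(model: str, question: str, image_url: str) -> dict:
    """Build a chat-style request body pairing a text question with an image.

    ASSUMPTION: field names mirror the content-parts layout of OpenAI's
    Chat Completions API; verify against the current documentation.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


# Placeholder values for illustration only.
payload = build_vision_request(
    model="your-vision-capable-model",
    question="What does this diagram show?",
    image_url="https://example.com/diagram.png",
)
print(json.dumps(payload, indent=2))
```

A text-only model such as Llama 3.1 would take the same kind of request with a plain string as the message content, with no image part.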

Model Size and Scalability

Understanding the parameter sizes and scalability options of both models is crucial for deployment considerations.

- GPT-4: OpenAI has not disclosed the exact number of parameters in GPT-4, but unofficial estimates place it well above the 175 billion parameters of GPT-3. As a result, GPT-4 requires considerable computational power for both training and inference. It’s typically accessed through OpenAI’s cloud-based API, which means users must rely on OpenAI’s infrastructure rather than self-hosting. This can limit flexibility for enterprises needing full on-premise control, but ensures consistent performance and scalability across high-volume use cases.

- Llama 3.1: Meta has openly released Llama 3.1 in three sizes: 8B, 70B, and 405B parameters. This variety enables developers to choose the best fit for their hardware capabilities and use cases. The 8B version is suitable for local or edge deployments, while the 405B model competes with GPT-4 in high-performance tasks. This level of transparency and modularity makes Llama 3.1 ideal for organizations that prioritize flexibility, cost management, or custom fine-tuning on specific infrastructure.
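The hardware implications of these sizes are easy to estimate with simple arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter. The sketch below uses the nominal sizes (8B, 70B, 405B) and assumes common precisions of 2, 1, and 0.5 bytes per parameter. It counts weights only and ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
# Weights-only memory estimate: parameters x bytes per parameter.
# ASSUMPTION: nominal parameter counts and common storage precisions;
# KV cache, activations, and framework overhead are NOT included.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
LLAMA_3_1_SIZES = {"8B": 8e9, "70B": 70e9, "405B": 405e9}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, params in LLAMA_3_1_SIZES.items():
    row = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):.1f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name} -> {row}")
```

By this estimate the 8B model needs about 16 GB for 16-bit weights, within reach of a single high-end GPU, while the 405B model needs about 810 GB and therefore a multi-GPU server.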

Conclusion

The competition between GPT-4 and Llama 3.1 reflects the evolving landscape of AI language models. GPT-4 provides an unmatched plug-and-play experience, making it ideal for businesses and casual users who value quality and convenience. Llama 3.1, on the other hand, brings flexibility, transparency, and innovation to the hands of developers and researchers who want deeper control.

As AI tools become more embedded in daily life, the choice between these two will likely come down to one core question: do you want a ready-made solution or a fully customizable engine? Either way, both models represent the pinnacle of current AI innovation and are pushing the field into exciting new territory.