Businesses use artificial intelligence (AI) to make better decisions, improve operational efficiency, and develop innovative solutions. However, many organizations that seek to benefit from AI face substantial challenges when implementing it at scale. This article identifies the key barriers that hinder enterprise AI adoption and offers concrete solutions to help organizations implement AI successfully.

The Promise and Challenges of Enterprise AI Adoption

Enterprises are showing heightened interest in adopting AI systems: experts predict that their spending on generative AI technology will exceed $200 billion over the next five years. Companies are already building AI into business processes such as customer support, predictive data analysis, and supply chain optimization. However, the path to implementation involves several hurdles.

1. Data Integration Challenges

Problem:

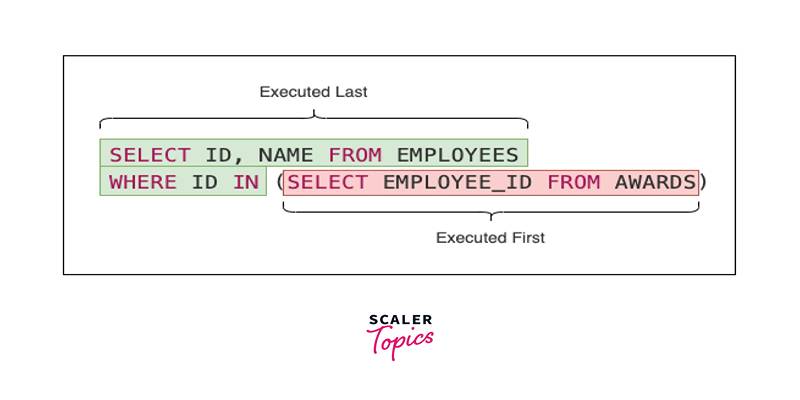

The biggest obstacle to enterprise AI adoption is poor integration of data from multiple sources. Many organizations struggle because their data is unstandardized and scattered across separate databases. Moreover, disconnected data pipelines make it hard to extract meaningful insights, which leads to incorrect predictions and poor decisions.

Solution:

To overcome data integration challenges:

- Data should be managed as a product throughout its lifecycle, from creation to retirement, to maintain its quality and accessibility.

- Advanced tools, including cloud-based platforms and data lakes, should be used to create a robust infrastructure that lets users centralize data storage and processing.

- Departments should follow organization-wide mapping protocols that specify how data is cleaned, standardized, and enriched.
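As an illustration of what such a mapping protocol might encode, the sketch below renames fields from two source systems into one shared schema, then cleans, standardizes, and enriches the values. The source names (`crm`, `billing`), field names, and date formats are hypothetical assumptions; a real protocol would be defined by the organization's data governance team and would typically live in its data pipeline tooling.

```python
from datetime import datetime

# Hypothetical mapping protocol: each source's column names -> shared schema names.
FIELD_MAPS = {
    "crm": {"CustID": "customer_id", "Email": "email", "SignupDate": "signup_date"},
    "billing": {"customer_no": "customer_id", "email_addr": "email", "created": "signup_date"},
}

# Hypothetical date format used by each source system.
DATE_FORMATS = {"crm": "%m/%d/%Y", "billing": "%Y-%m-%d"}


def standardize(record: dict, source: str) -> dict:
    """Map one source record onto the shared schema, then clean and enrich it."""
    mapping = FIELD_MAPS[source]
    # Mapping: rename source fields to the shared schema (missing fields become None).
    out = {target: record.get(field) for field, target in mapping.items()}
    # Cleaning: IDs as strings, emails trimmed and lowercased so records can be matched.
    if out["customer_id"] is not None:
        out["customer_id"] = str(out["customer_id"]).strip()
    if out["email"] is not None:
        out["email"] = out["email"].strip().lower()
    # Standardization: convert every source's date format to ISO 8601 (YYYY-MM-DD).
    if out["signup_date"] is not None:
        parsed = datetime.strptime(out["signup_date"], DATE_FORMATS[source])
        out["signup_date"] = parsed.date().isoformat()
    # Enrichment: record lineage so analysts know where each row came from.
    out["source_system"] = source
    return out


crm_row = {"CustID": 1042, "Email": " Ana@Example.com ", "SignupDate": "03/15/2024"}
billing_row = {"customer_no": "1042", "email_addr": "ana@example.com", "created": "2024-03-15"}

a = standardize(crm_row, "crm")
b = standardize(billing_row, "billing")
```

After standardization, the two rows agree on `customer_id`, `email`, and `signup_date`, so they can be matched even though the source systems stored them differently.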

2. Talent Gap in AI Expertise

Problem:

Complex AI models require specialized technical staff to develop them, maintain the systems, and resolve operational problems. Yet 69% of organizations report that they cannot find suitable AI professionals. This shortage delays projects and forces organizations to depend heavily on outside service providers.

Solution:

To overcome their talent deficiency, organizations should consider the following:

- The organization should run internal AI training programs to close its workforce's AI skill gaps.

- The organization should collaborate with academic institutions to recruit people skilled in emerging AI techniques and to gain access to new research projects.

- The organization can adopt low-code platforms that let non-technical staff build AI applications. For example, Appian provides simplified tools for developing AI-powered experiences through non-technical interfaces.

3. Ethical Concerns and Compliance Issues

Problem:

AI systems often raise ethical problems such as algorithmic bias, privacy intrusions, and intellectual property (IP) disputes. Differing regional standards make implementation harder and heighten ethics and compliance concerns. Organizations can face substantial deployment delays when they must meet regulations such as GDPR or HIPAA.

Solution:

To navigate ethical challenges:

- The organization should partner with technology suppliers whose solutions are built on ethical AI frameworks.

- The organization should periodically audit its algorithms for bias and unexpected outcomes to verify that they meet ethical standards.

- Encryption and anonymization techniques should be used to protect sensitive data and safeguard user privacy throughout model training.
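A minimal sketch of two of these practices, assuming a binary classifier and string identifiers: a periodic bias check based on demographic parity (the gap in positive-prediction rates between groups, which is only one of several possible fairness metrics), and pseudonymization of identifiers with a keyed hash (HMAC-SHA256) before data enters a training set. The audit data, group labels, 0.2 threshold, and key are hypothetical placeholders, and encryption of data at rest or in transit is not shown.

```python
import hashlib
import hmac
from collections import defaultdict


def demographic_parity_gap(predictions, groups):
    """Return the largest gap in positive-prediction rate between groups, plus the rates."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so records can still be
    joined for training, but the original value cannot be recovered without
    the key. This is pseudonymization, not full anonymization.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical audit data: model approvals (1) and denials (0) by applicant group.
preds = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap, rates = demographic_parity_gap(preds, groups)

THRESHOLD = 0.2  # hypothetical tolerance set by the organization's review board
if gap > THRESHOLD:
    print(f"Flag for review: approval rates {rates}, gap {gap:.2f}")

# Placeholder key for illustration; a real key would come from a secrets manager.
key = b"replace-with-a-secret-from-a-key-vault"
token = pseudonymize("patient-00123", key)
```

Here group A is approved 75% of the time and group B 25%, so the 0.5 gap is flagged. A flag calls for human review of the model and its data, not an automatic conclusion that the model is unfair.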

4. Measuring ROI from AI Investments

Problem:

Proving return on investment (ROI) remains a major barrier to large-scale AI adoption for enterprise leaders. Nearly half of executives report substantial difficulty calculating the ROI of their generative AI investments.

Solution:

To measure ROI effectively:

- Businesses should prioritize valuable use cases with quantifiable outcomes, such as cost reduction or revenue generation.

- Executives should measure success outcomes using specific KPIs, such as accuracy rates, time savings, and customer satisfaction scores.

- Projects should be rolled out in stages, expanding only after the first deployment phase has proven successful.
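The usual ROI formula is (gains - cost) / cost. The sketch below applies it to a hypothetical pilot and adds a simple stage gate that approves expansion only when ROI clears a minimum and every KPI meets a target agreed in advance. All figures, KPI names, and the `min_roi` threshold are illustrative assumptions, and the gate assumes every KPI is "higher is better".

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """Hypothetical figures for one AI use case, measured over the same period."""
    cost: float           # total spend: licenses, infrastructure, staff time
    savings: float        # e.g. support hours saved x loaded hourly rate
    added_revenue: float  # revenue attributable to the use case
    kpis: dict            # measured KPI values
    targets: dict         # KPI targets agreed before the pilot started


def roi(result: PilotResult) -> float:
    """Classic ROI: (gains - cost) / cost."""
    gains = result.savings + result.added_revenue
    return (gains - result.cost) / result.cost


def ready_to_expand(result: PilotResult, min_roi: float = 0.0) -> bool:
    """Stage gate: expand only if ROI clears the bar and every KPI meets its target."""
    kpis_met = all(result.kpis[name] >= target for name, target in result.targets.items())
    return roi(result) >= min_roi and kpis_met


pilot = PilotResult(
    cost=120_000,
    savings=90_000,
    added_revenue=60_000,
    kpis={"accuracy": 0.93, "hours_saved_per_week": 35, "csat": 4.4},
    targets={"accuracy": 0.90, "hours_saved_per_week": 30, "csat": 4.2},
)
```

With these numbers, gains of 150,000 against a cost of 120,000 give an ROI of 0.25 (25%), and all three KPIs exceed their targets, so the pilot passes a 10% ROI gate but would fail a 30% one.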

5. Overcoming Internal Resistance

Problem:

Successful AI implementation depends on employees' willingness to embrace new technology, and employee resistance is among the principal challenges. Workers who fear for their jobs or are dissatisfied with AI tools may express it through sabotage. One study found that 41 percent of millennial and Gen Z employees have deliberately undermined their organization's AI plans.

Solution:

To reduce resistance:

- Employees should receive clear explanations that AI augments their roles rather than replacing them.

- The organization should appoint "AI ambassadors" with formal authority to champion AI adoption and address employee concerns as they arise.

- Employees should be given user-friendly tools that integrate smoothly with their existing workflows.

6. Siloed Development Efforts

Problem:

In many enterprises, departments develop generative AI initiatives independently, without coordinating with one another. This lack of cross-functional collaboration wastes effort and misses opportunities to integrate work across business units.

Solution:

To break down silos:

- IT departments should partner closely with business units at every stage of application development.

- The organization should develop a strategic plan that aligns every department with shared AI adoption priorities.

- Integration platforms such as JFrog ML provide automated workflows that connect teams' DevOps and MLOps processes so they can work together reliably.

7. Addressing Cost Concerns

Problem:

AI projects require considerable upfront investment in advanced infrastructure, technical tools, and skilled staff. CEOs want assurance that these investments will deliver returns that justify their cost.

Solution:

To manage costs effectively:

- Organizations should adopt solutions that scale incrementally with business growth rather than overhauling entire systems at once.

- Organizations should use subscription-based cloud platforms such as Microsoft Azure and AWS to keep budgets flexible during the initial deployment stages.

- Vendors should be selected through a detailed analysis of long-term profitability rather than on price alone.
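Choosing on price alone can mislead, because the option that is cheapest at the start is not always the cheapest over a project's life. The sketch below compares cumulative spend for a hypothetical upfront build against a hypothetical pay-as-you-go subscription and finds the first month in which the upfront option becomes cheaper overall. All amounts are invented for illustration; a real analysis would also weigh expected returns, discounting, and exit costs.

```python
def cumulative_costs(months, upfront, monthly_build, monthly_subscription):
    """Cumulative spend at the end of each month for the two hypothetical options."""
    build = [upfront + monthly_build * m for m in range(1, months + 1)]
    subscription = [monthly_subscription * m for m in range(1, months + 1)]
    return build, subscription


def break_even_month(build, subscription):
    """First month in which the upfront build is cheaper overall, or None if never."""
    for month, (own, rent) in enumerate(zip(build, subscription), start=1):
        if own < rent:
            return month
    return None


# Hypothetical figures: 300k upfront plus 5k/month to operate,
# versus a 20k/month subscription with no upfront cost, over 36 months.
build, subscription = cumulative_costs(
    36, upfront=300_000, monthly_build=5_000, monthly_subscription=20_000
)
month = break_even_month(build, subscription)
print(f"Upfront build becomes cheaper in month {month}")
```

With these figures the subscription costs less for the first 19 months, the two options are equal at month 20, and the upfront build is cheaper from month 21 onward. A short pilot therefore favors the subscription, while a long-lived deployment may justify the upfront investment.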

Conclusion

Deploying AI in the enterprise offers transformative opportunities but also presents specific hurdles that companies must address. To implement AI successfully, organizations need to proactively resolve data integration problems, talent shortages, and ethical challenges.

Enterprises can maximize the value of generative AI by fostering cooperation between departments, training employees, implementing ethical guidelines, and tracking ROI effectively.